If humans could speak with animals, what could we learn from them, and could we better protect them from environmental challenges? Well, that’s what one ambitious, multidisciplinary project is trying to find out.

Project CETI (Cetacean Translation Initiative) is exploring ways to unlock the secrets of whale communication, especially that of the sperm whale. It has long been known that sperm whales use complex sequences of clicks, known as codas, to communicate with each other over large distances; however, researchers are only now starting to understand how complex these codas really are. In 2017, CETI discovered that sperm whale codas may in fact resemble Morse code, with patterns of clicks being used to convey increasingly complex messages.

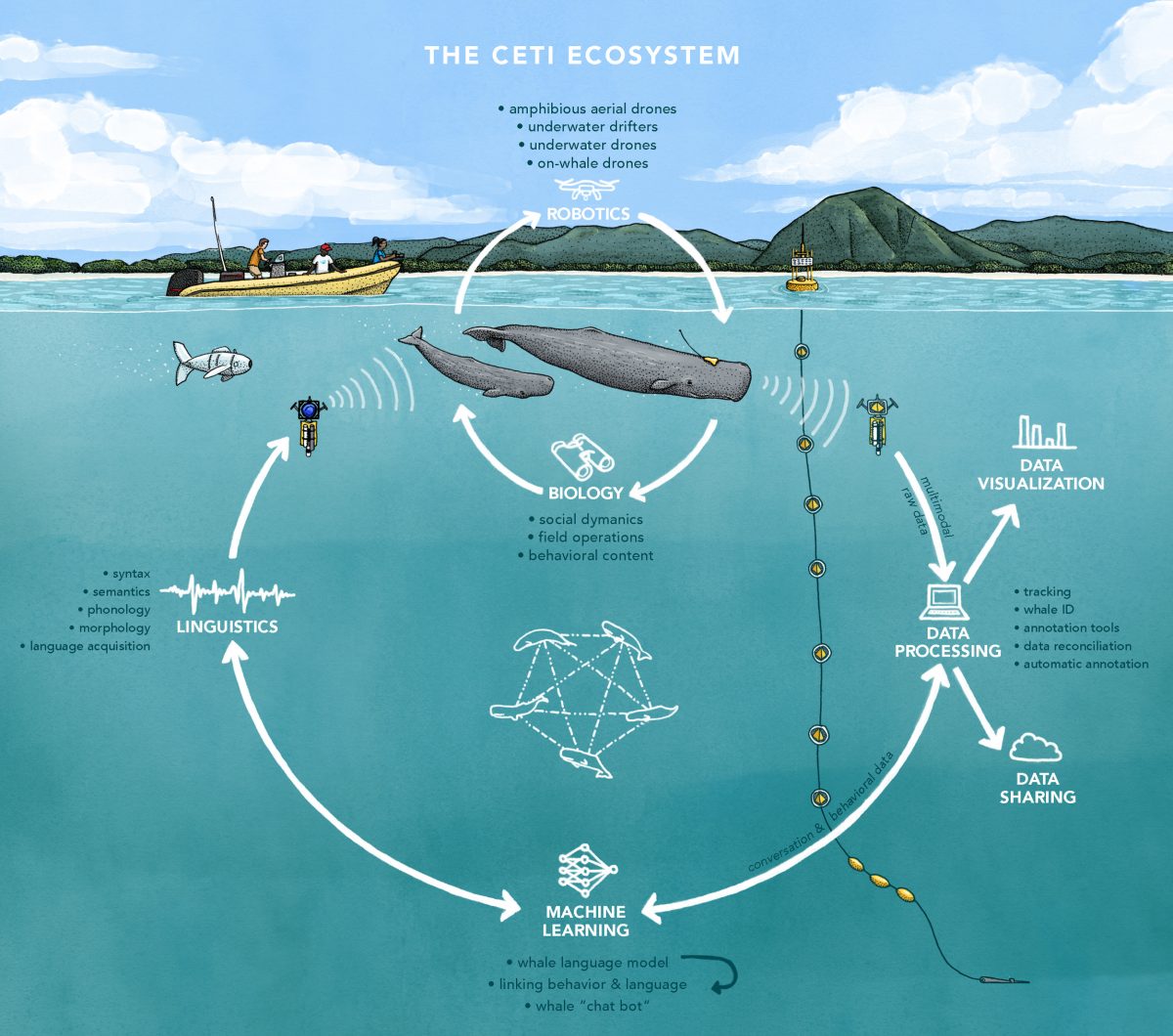

The CETI team hopes to bring together a wide range of skills to decode this ‘language’ and perhaps even communicate back to the whales. First, biologists work with roboticists to collect audio recordings of whales using suction-cup-mounted computers, underwater microphones and small swimming robots. These recordings are then fed to machine learning algorithms, which can break down the sounds and make predictions about their meaning. This information is passed on to linguists, who attempt to form their own calls to play back to the whales.
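To make the idea of ‘breaking down’ codas a little more concrete, here is a toy sketch in Python. It is not CETI’s method: it swaps in a simple nearest-template match on click rhythm in place of a trained machine learning model, and the template names and interval values are hypothetical placeholders, not real sperm whale coda types. It assumes each coda arrives as a list of click timestamps in seconds.

```python
import math

# HYPOTHETICAL rhythm templates, for illustration only (not real coda types).
# Each is a sequence of inter-click intervals normalised to sum to 1.
TEMPLATES = {
    "regular_5": [0.25, 0.25, 0.25, 0.25],     # five evenly spaced clicks
    "slow_start_5": [0.4, 0.4, 0.1, 0.1],      # two slow gaps, then two quick ones
}


def rhythm(click_times):
    """Turn click timestamps (seconds) into normalised inter-click intervals."""
    if len(click_times) < 2:
        raise ValueError("a coda needs at least two clicks")
    intervals = [b - a for a, b in zip(click_times, click_times[1:])]
    total = sum(intervals)
    # Dividing by the total duration compares rhythm, not tempo.
    return [i / total for i in intervals]


def classify(click_times):
    """Return the nearest template with the same click count, or None."""
    r = rhythm(click_times)
    best_name, best_dist = None, math.inf
    for name, template in TEMPLATES.items():
        if len(template) != len(r):
            continue  # only compare codas with the same number of clicks
        dist = math.dist(r, template)  # Euclidean distance (Python 3.8+)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name


print(classify([0.0, 0.2, 0.4, 0.6, 0.8]))  # -> regular_5
```

Because the intervals are normalised by each coda’s total duration, a fast and a slow version of the same pattern map to the same template. A real system would learn such categories from a large, labelled library of recordings rather than hard-coding them.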

The hope is that if humans can fully understand sperm whales, new strategies can be developed to better protect this threatened species. As well as potentially revealing information about their needs and feelings (sperm whales are known to exhibit a wide range of emotions), understanding whales could also provide a huge boost to public support for, and interest in, conservation projects. Indeed, the discovery of whale song in the 1960s was one of the main contributors to the widespread “Save the Whales” movement of the decades that followed.

However, learning to ‘speak’ sperm whale comes with some significant hurdles. Firstly, a large dataset is required to train the AI algorithm, which means a huge library of sperm whale sounds needs to be collected and catalogued. Given sperm whales’ deep-water habitat, long migration routes and reclusive nature, this is easier said than done. Furthermore, there is still significant debate about whether animals use ‘language’ in any way remotely similar to human speech.

Do Animals ‘Talk’?

For decades, the mainstream scientific view has been that language is a uniquely human trait. Experts such as Konrad Lorenz, one of the pioneers of animal behaviour research, suggested that animal vocalisations, although they serve to signal intent or psychological state, fall far short of what we understand as language: animals communicate, but they do not talk. He also suggested that animal calls have more in common with human facial expressions, in that they are innately understood from an early age but do not evolve or change over time.

More recent research, however, is starting to question some of these long-established beliefs. For example, studies of bird calls suggest that birds learn and develop new calls in response to their environment, and that specific calls have specific meanings. Some researchers have even suggested that this amounts to the rudiments of ‘grammar’, while others have detected regional ‘dialects’ and ‘cultures’ amongst separated populations of the same species. Research into bird calls has also suggested that birds’ vocabularies are changing in response to environmental pressures and increasing urbanisation, with some calls being ‘lost’.

Of course, the communicative ability of animals also varies hugely between species, and with their relationship to humans. Many animals, especially those that live largely solitary lives, likely have little need for language. It is also important to note that human language is primarily spoken or written, whereas animal ‘speech’ may also involve subtle and complex expressions or smells imperceptible to humans. For example, one AI project, DeepSqueak, aims to detect ultrasonic rodent calls that are inaudible to the human ear.

Marine mammals, such as dolphins and whales, are particularly good candidates for research due to the complexity of their sounds and their high intelligence. Their limited ability to express emotion physically, as many other animals do, combined with the vast distances over which they communicate, also likely means that ‘language’ is an important part of their everyday lives and survival.

Humpback whales in particular have shown emotional intelligence, even bordering on empathy, and have been spotted protecting other species from predators. Although this could be explained in basic self-interested terms (depriving a rival species of food benefits them), it is also possibly indicative of a more complex understanding of their environment, and even of lofty concepts such as altruism.

By unlocking some of these communication secrets, using the latest advances in robotics and digital tools, the CETI team hopes to create a more equitable relationship between humans and our animal neighbours, as well as devise new strategies to promote conservation.

Translating animal sounds is not the only way AI is helping to understand the needs and behaviour of animals. We recently covered an image recognition AI which can not only identify individual animals of the same species, but also make estimations about their health and state of mind.

Although this technology has the potential to greatly aid conservation research and unlock a new understanding of our animal neighbours, it also comes with potential drawbacks. Some experts have suggested that mastering animal communication could simply allow humans to dominate and exploit animals further, while anthropomorphising them can also be detrimental. Ultimately, animals are not human; they have specific and complex non-human environmental needs. Turning animals into emoting, ‘speaking’ entities might draw attention away from their very real everyday survival requirements.

Furthermore, using technology and image recognition software to identify individuals and even estimate their state of mind opens up some potentially troubling possibilities, not only for animals, but for us humans too. Controversy is already developing around the use of facial recognition software in stores to detect known shoplifters. Will technology soon be able to identify ‘criminal intent’ in someone’s subtle movements before a crime has even been committed?

It is also worth considering whether, even if we could ‘talk’ to an animal, we would actually understand each other. Our frames of reference are so different that it might be an exercise in frustration for both sides. As the Austrian-British philosopher Ludwig Wittgenstein once wrote: “If a lion could speak, we could not understand him.”

The post Can AI Computing Let Us ‘Talk’ to Sperm Whales? appeared first on Digital for Good | RESET.ORG.