Facebook groups reportedly have the same problem identified earlier on Instagram

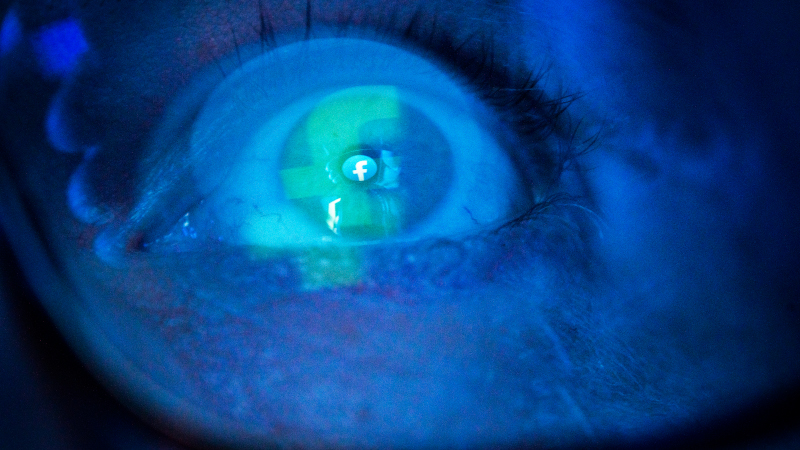

The parent company of Facebook and Instagram continues to struggle with its algorithms enabling child molesters on its platforms, the Wall Street Journal reported on Friday.

Earlier this year, the Journal and researchers at two US universities revealed that Instagram algorithms were helping connect accounts “devoted to the creation, purchasing and trading of underage-sex content.” Meta responded by setting up a child-safety task force and developing software tools to deal with the issue.

Five months later, the company is “struggling to prevent its own systems from enabling and even promoting a vast network of pedophile accounts,” the Journal noted.

Earlier this week, the Journal cited research by the Canadian Centre for Child Protection showing that Instagram algorithms still recommended pedophile content. While some pedophilia-related hashtags have been banned, the system simply generates new ones with minor variations, the researchers said.

According to the Canadian group, a “network” of Instagram accounts with up to 10 million followers apiece “has continued to livestream videos of child sex abuse months after it was reported to the company.” Another group, the Stanford Internet Observatory, flagged several groups “popular with Instagram’s child sexualization community” to Meta back in June, and said that some of them were still operating.

The problem also affects Facebook Groups, a key feature of the platform, which has more than three billion monthly users worldwide. In one instance, when Journal reporters flagged a group named ‘Incest,’ they received a response that it “doesn’t go against our Community Standards.” The group was removed only after the outlet brought it to the attention of Meta’s public relations department.

“We are actively continuing to implement changes identified by the task force we set up earlier this year,” a Meta spokesperson was quoted as saying. The task force has numbered more than 100 employees at times. It has banned thousands of hashtags used by pedophiles and improved technology to identify nudity and sexual activity in live video broadcasts.

Mark Zuckerberg’s social media behemoth also said it had disabled “thousands” of accounts, “hidden 190,000 groups” in Facebook’s search results, and invested in new software tools to better deal with the issue. The task force also sent a team to work with Meta’s content moderator contractors in Mumbai, India.

While Meta’s algorithms can connect users with “illicit interests” and tailor content to appeal to them, restricting or removing features that connect people with acceptable content would not be reasonable, a company spokeswoman told the Journal.
